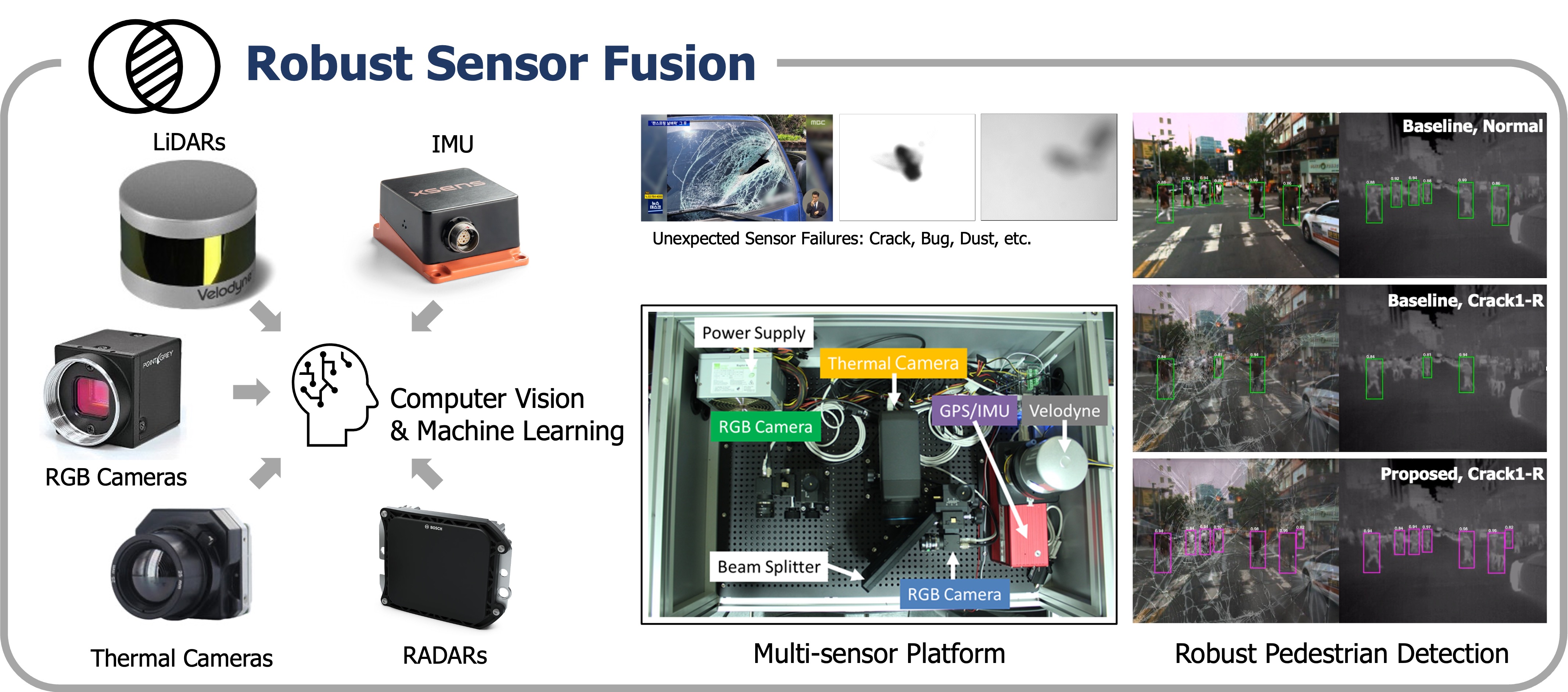

#SensorFusion #AdaptiveSensing #ReliablePerception

In autonomous driving, robust sensor fusion is key to reliable perception in complex and dynamic environments. Our lab integrates data from multiple sensors, including RGB cameras, thermal infrared cameras, and 3D LiDAR, to improve the accuracy and resilience of object detection, depth estimation, and scene understanding. Using adaptive fusion techniques, we aim for autonomous systems that operate safely under varying conditions, from bright daylight to complete darkness, and in challenging scenarios such as fog, rain, and occlusion. Our research focuses on real-time, learning-based fusion strategies that dynamically adjust to sensor reliability, so that autonomous vehicles can perceive their surroundings with higher confidence and make precise, informed decisions. Through cutting-edge AI models and continuous optimization, we strive to build perception frameworks that improve the safety, efficiency, and scalability of autonomous mobility.
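To make "adjusting to sensor reliability" concrete, here is a minimal sketch of one classical way to do it: inverse-variance weighting of per-sensor depth estimates. It is an illustration only, not our learning-based method. The function name `fuse_depth`, the sensor names, and all numbers are hypothetical, and the sketch assumes each sensor reports a variance alongside its estimate (in a learned system this would typically be predicted by the model).

```python
from typing import Dict, Tuple


def fuse_depth(estimates: Dict[str, Tuple[float, float]]) -> Tuple[float, float]:
    """Fuse per-sensor (depth_m, variance) pairs by inverse-variance weighting.

    Returns (fused_depth_m, fused_variance). A sensor with a small variance
    (high reliability) receives a large weight; an unreliable sensor is
    down-weighted rather than discarded.
    """
    if not estimates:
        raise ValueError("need at least one sensor estimate")

    weights = {}
    for name, (_, variance) in estimates.items():
        if variance <= 0:
            raise ValueError(f"variance for {name!r} must be positive")
        weights[name] = 1.0 / variance

    total_weight = sum(weights.values())
    fused_depth = sum(weights[n] * estimates[n][0] for n in estimates) / total_weight
    fused_variance = 1.0 / total_weight
    return fused_depth, fused_variance


if __name__ == "__main__":
    # Hypothetical readings for one object, as (depth in metres, variance).
    daylight = {"rgb": (20.0, 0.25), "thermal": (21.0, 4.0), "lidar": (20.4, 0.04)}
    # At night the RGB estimate is assumed to become much less reliable.
    night = {"rgb": (26.0, 25.0), "thermal": (21.0, 1.0), "lidar": (20.4, 0.04)}

    for label, readings in (("daylight", daylight), ("night", night)):
        depth, variance = fuse_depth(readings)
        print(f"{label}: depth={depth:.2f} m, variance={variance:.3f}")
```

In the night case the RGB reading is far from the others, but its large assumed variance means it barely moves the fused result. The same principle applies when the weights come from a learned reliability estimate instead of a fixed variance.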

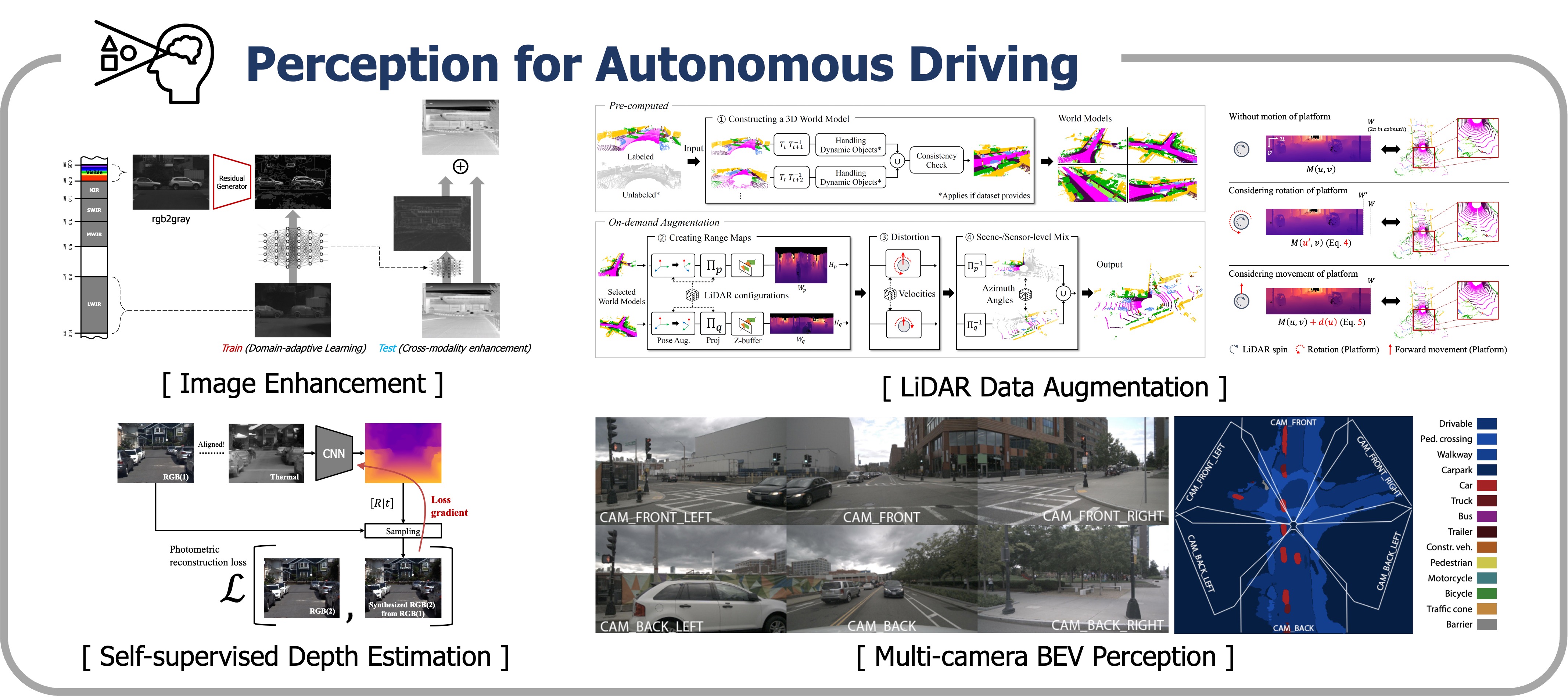

#3DSceneUnderstanding #DepthEstimation #Detection #Segmentation

Perception is the foundation of autonomous driving, enabling vehicles to understand and interpret their surroundings in real time. Our research focuses on computer vision tasks for autonomous driving such as object detection, semantic segmentation, depth estimation, and 3D scene understanding. Together, these tasks build a comprehensive, high-fidelity representation of the environment. Using deep learning, we develop robust perception models that accurately detect pedestrians, vehicles, and obstacles, even in challenging conditions such as low light, adverse weather, and occlusion. Our work extends beyond individual sensor modalities, incorporating multi-sensor fusion to enhance reliability and reduce uncertainty. Through continuous advancements in AI-driven perception, we aim to create safer and more intelligent autonomous systems capable of navigating real-world scenarios with precision and confidence.

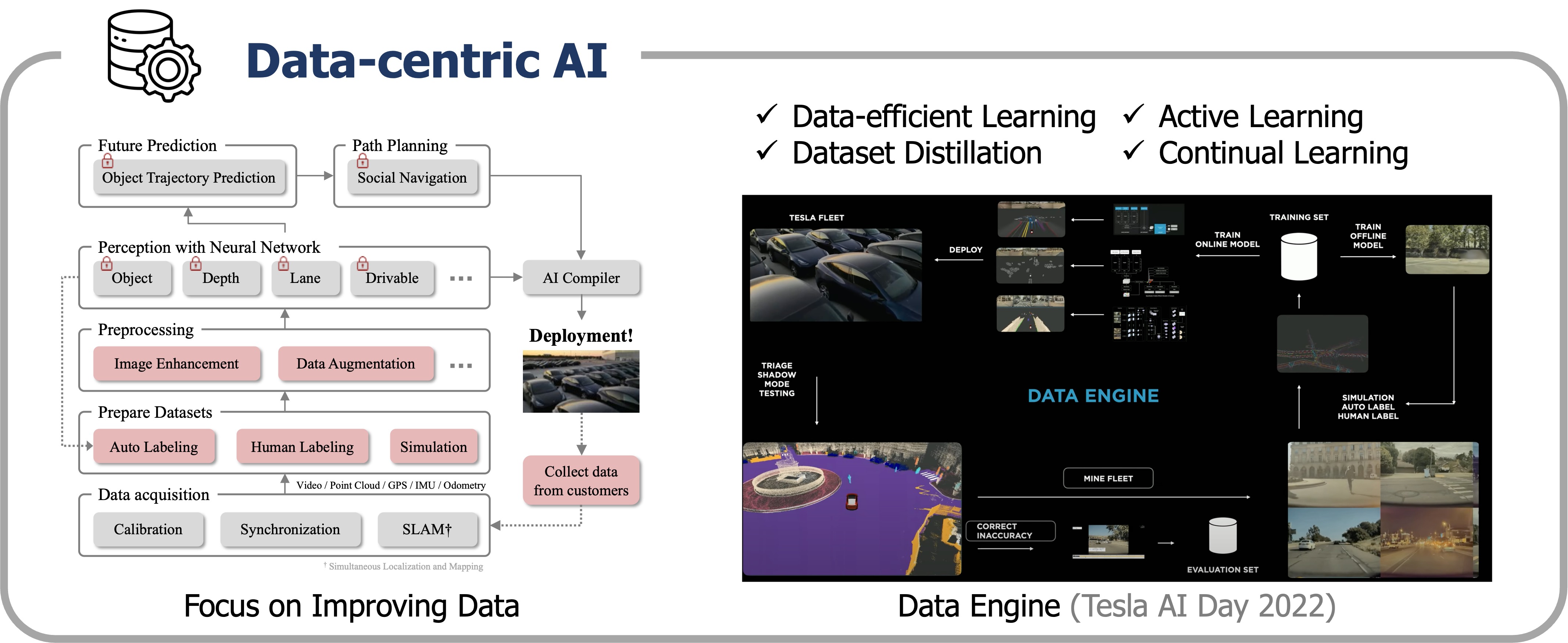

#Data-centric #DatasetDistillation #DataEngine

In the evolving landscape of autonomous driving, data-centric AI plays a pivotal role in enhancing model performance and reliability. Our lab is dedicated to developing innovative techniques such as dataset distillation, parameterization, and augmentation to refine training data, ensuring more robust and generalizable AI models. By focusing on the quality and diversity of data, we improve the learning efficiency and adaptability of perception systems in dynamic environments. Our research explores methods to automate data labeling, reduce biases, and simulate rare scenarios, creating comprehensive datasets that better reflect real-world conditions. Through data-centric strategies, we empower autonomous systems to anticipate and respond to complex driving situations, pushing the boundaries of what AI can achieve in intelligent transportation.
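As a small illustration of selecting training data for diversity, the sketch below implements k-center greedy selection, a simple coreset baseline. It is not dataset distillation itself: distillation synthesizes new training samples, whereas this only picks existing ones. The function name `k_center_greedy` and the toy feature vectors are hypothetical, and the sketch assumes each sample is already represented by a feature vector (for example, an embedding from a pretrained network).

```python
import math
from typing import List, Sequence


def k_center_greedy(
    features: Sequence[Sequence[float]], k: int, first: int = 0
) -> List[int]:
    """Pick k sample indices that cover the feature space.

    Starting from index `first`, repeatedly add the sample that is farthest
    (Euclidean distance) from everything selected so far.
    """
    n = len(features)
    if not 0 < k <= n:
        raise ValueError("k must be between 1 and the number of samples")
    if not 0 <= first < n:
        raise ValueError("first must be a valid sample index")

    selected = [first]
    # Distance from every sample to its nearest selected sample.
    min_dist = [math.dist(f, features[first]) for f in features]

    while len(selected) < k:
        farthest = max(range(n), key=min_dist.__getitem__)
        selected.append(farthest)
        for i in range(n):
            d = math.dist(features[i], features[farthest])
            if d < min_dist[i]:
                min_dist[i] = d
    return selected


if __name__ == "__main__":
    # Toy 2-D features: two near-duplicate pairs and one isolated (rare) sample.
    feats = [(0.0, 0.0), (0.1, 0.0), (10.0, 0.0), (10.1, 0.0), (5.0, 5.0)]
    print(k_center_greedy(feats, k=3))
```

On the toy data, the selection takes one sample from each near-duplicate pair plus the isolated one, which is the behavior wanted when rare scenarios should not be crowded out by redundant frames.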